Playing Video with AVPlayer in SwiftUI

I’ve been experimenting more and more with SwiftUI and I really wanted to see what we can do with video content. Today I’ll share my findings: how to play video using AVFoundation in SwiftUI, including some mistakes to avoid.

The best way to play media on iOS is AVFoundation, Apple’s framework for playing audio and video on all of its platforms. I’ll focus on video only.

As you might guess, at the time of writing SwiftUI has no video view ready for us to play with. So the first step is to create a video player with UIKit and bring it to SwiftUI.

UIKit Player View

In case you’re not familiar with AVFoundation: it doesn’t give us a “ready to play” player view. Or at least, not the way we want it. AVKit comes with AVPlayerViewController, an all-in-one UIViewController to which you can pass a media item. Otherwise, you go one level lower and use AVPlayerLayer, a Core Animation layer that renders the player’s video.

Since we want to stay “hands-on”, it makes sense to use the latter.

```swift
import UIKit
import AVFoundation

enum PlayerGravity {
    case aspectFill
    case resize
}

class PlayerView: UIView {

    // Forward the player to the backing AVPlayerLayer.
    var player: AVPlayer? {
        get { return playerLayer.player }
        set { playerLayer.player = newValue }
    }

    let gravity: PlayerGravity

    init(player: AVPlayer, gravity: PlayerGravity) {
        self.gravity = gravity
        super.init(frame: .zero)
        self.player = player
        self.backgroundColor = .black
        setupLayer()
    }

    func setupLayer() {
        switch gravity {
        case .aspectFill:
            playerLayer.contentsGravity = .resizeAspectFill
            playerLayer.videoGravity = .resizeAspectFill
        case .resize:
            playerLayer.contentsGravity = .resize
            playerLayer.videoGravity = .resize
        }
    }

    required init?(coder: NSCoder) {
        fatalError("init(coder:) has not been implemented")
    }

    // The backing layer, guaranteed to be an AVPlayerLayer by layerClass below.
    var playerLayer: AVPlayerLayer {
        return layer as! AVPlayerLayer
    }

    // Override UIView property so the view is backed by an AVPlayerLayer.
    override static var layerClass: AnyClass {
        return AVPlayerLayer.self
    }
}
```

To get direct access to AVPlayerLayer, I override the view’s layerClass so that its backing layer is an AVPlayerLayer. The rest is accessors around it. Finally, I introduced a PlayerGravity enum that will help us quickly change how the video fills the player when using it with SwiftUI.

As you can see, the view doesn’t hold any logic: no player controller, no play / pause. It’s only here for rendering.
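If you’d rather not repeat a switch every time a new gravity is added, one option is to let each PlayerGravity case carry its AVFoundation equivalent. This is a sketch of an alternative version of the enum, not the article’s code; the `videoGravity` property name is my own. It only maps `videoGravity`, assuming (as far as I know) that this is the property that controls how AVPlayerLayer scales its video.

```swift
import AVFoundation

// Alternative sketch of PlayerGravity: each case knows its AVLayerVideoGravity.
// `videoGravity` is a hypothetical helper name, not part of AVFoundation.
enum PlayerGravity {
    case aspectFill
    case resize

    var videoGravity: AVLayerVideoGravity {
        switch self {
        case .aspectFill: return .resizeAspectFill
        case .resize: return .resize
        }
    }
}

// PlayerView.setupLayer() could then shrink to a single line:
// playerLayer.videoGravity = gravity.videoGravity
```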

We can now bring this view to SwiftUI.

SwiftUI Representation

To expose our PlayerView to SwiftUI, we need to implement a specific protocol: UIViewRepresentable. I’ve created a separate type for this since it would be consumed by SwiftUI only.

```swift
import SwiftUI
import AVFoundation

// SwiftUI views are value types, so the representable is a struct.
// Its memberwise initializer provides init(player:gravity:).
struct PlayerContainerView: UIViewRepresentable {
    typealias UIViewType = PlayerView

    let player: AVPlayer
    let gravity: PlayerGravity

    // Creates the UIKit view that SwiftUI will display.
    func makeUIView(context: Context) -> PlayerView {
        return PlayerView(player: player, gravity: gravity)
    }

    // Intentionally empty: no playback logic lives here.
    func updateUIView(_ uiView: PlayerView, context: Context) { }
}
```

Much like our previous class, this type is only here to create a representation of PlayerView. Once again, there’s no logic: we only pass the player along to the view. I’ll explain in a later section why we shouldn’t put logic there.
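For context on where logic could end up: SwiftUI calls `makeUIView(context:)` when it first needs the UIKit view, and `updateUIView(_:context:)` every time it re-evaluates the representable, which can happen far more often than you expect. If you ever want `updateUIView` to do something, a rendering-only sync is one option that keeps playback decisions out of it. This is a hedged sketch, not the article’s code; it assumes the PlayerView and PlayerGravity types defined above.

```swift
import SwiftUI
import AVFoundation

// Sketch: updateUIView only keeps rendering in sync with the inputs.
// It never calls play() or pause(); that stays with the view model.
struct PlayerContainerView: UIViewRepresentable {
    let player: AVPlayer
    let gravity: PlayerGravity

    func makeUIView(context: Context) -> PlayerView {
        return PlayerView(player: player, gravity: gravity)
    }

    func updateUIView(_ uiView: PlayerView, context: Context) {
        // Reassign only if SwiftUI handed us a different AVPlayer instance.
        if uiView.player !== player {
            uiView.player = player
        }
    }
}
```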

Our container is ready to provide a view for us, so we can dive into the SwiftUI part.

SwiftUI Implementation

```swift
import SwiftUI

struct ContentView: View {
    let model: PlayerViewModel

    init() {
        model = PlayerViewModel(fileName: "video")
    }

    var body: some View {
        // PlayerGravity only defines .aspectFill and .resize.
        PlayerContainerView(player: model.player, gravity: .aspectFill)
    }
}
```

The body stays really simple: we create a container view and pass along our settings. What interests us is in PlayerViewModel.

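```swift
import AVFoundation
import Combine

class PlayerViewModel: ObservableObject {
    let player: AVPlayer

    init(fileName: String) {
        // The video ships in the app bundle, so a missing file is a programmer error.
        guard let url = Bundle.main.url(forResource: fileName, withExtension: "mp4") else {
            fatalError("Missing \(fileName).mp4 in the app bundle")
        }
        self.player = AVPlayer(playerItem: AVPlayerItem(url: url))
        self.play()
    }

    func play() {
        let currentItem = player.currentItem
        // If the video already reached its end, rewind before playing again.
        if currentItem?.currentTime() == currentItem?.duration {
            currentItem?.seek(to: .zero, completionHandler: nil)
        }
        player.play()
    }

    func pause() {
        player.pause()
    }
}
```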

Since my asset is part of the app bundle, I load it when constructing the view model, then start playing it.

That’s it.

When ContentView is created, it passes the player (whose media we know is valid) down to its subviews. The player state stays in PlayerViewModel. You won’t see it play in the Xcode canvas (which is expected), but if you launch it on a simulator or a physical device, you’ll get your video playing.

Wait, that’s it? I thought it would be much harder.

Yes. Pretty neat, right?

We haven’t covered player controls, or the player state you’d need to track when playing a remote file, which is why it’s so straightforward. Let me dive a bit more into the controls and how to propagate actions.

SwiftUI Player Controls

Let’s focus on two actions first, play and pause.

```swift
enum PlayerAction {
    case play
    case pause
}
```

In my very first attempt at building a video player, I wanted PlayerView to hold the player logic, with the view model only exposing actions to it. It looked like this:

```swift
import SwiftUI
import Combine

class PlayerViewModel: ObservableObject {
    let actionSubject = PassthroughSubject<PlayerAction, Never>()

    var action: AnyPublisher<PlayerAction, Never> {
        return actionSubject.eraseToAnyPublisher()
    }

    // Every toggle emits an action for PlayerView to act on.
    @Published var isPlaying: Bool = false {
        didSet {
            if isPlaying {
                actionSubject.send(.play)
            } else {
                actionSubject.send(.pause)
            }
        }
    }

    // ...
}

struct ContentView: View {
    @ObservedObject var model: PlayerViewModel

    // ...

    var body: some View {
        VStack {
            // First-attempt container (not shown): it took a file name and the action publisher.
            PlayerContainerView(filename: self.model.filename, gravity: .aspectFill, action: self.model.action)
            Button(action: {
                self.model.isPlaying.toggle()
            }, label: {
                Image(systemName: self.model.isPlaying ? "pause" : "play")
                    .padding()
            })
        }
    }
}
```

The idea was fairly simple: toggling the state of PlayerViewModel triggers a new action, and PlayerView listens to that action and plays or pauses accordingly.

Unfortunately, I ran into many issues with this solution, mostly because, from my understanding, SwiftUI views should be stateless and not hold data: every toggle of the player was actually recreating a PlayerContainerView as well as a PlayerView, which didn’t keep its previous state.

This is probably something I was missing before. It explains why SwiftUI views usually observe changes to their data rather than calling internal functions to populate it: those functions are probably called more often than needed.
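A related point, if you can target iOS 14 or later (it isn’t available in iOS 13): `@StateObject` tells SwiftUI to create an observable object once for a view’s lifetime in the hierarchy and keep it across re-renders, even though the ContentView struct itself is recreated. With the earlier `let model` initialized in `init()`, every recreation of ContentView builds a new PlayerViewModel, and therefore a new AVPlayer. This minimal sketch assumes the PlayerViewModel and PlayerContainerView from this article; it doesn’t change where playback logic lives, it only makes the view model outlive view updates.

```swift
import SwiftUI

// iOS 14+ sketch: the autoclosure initializer runs once per view identity,
// so the same PlayerViewModel (and AVPlayer) survives body re-evaluations.
struct ContentView: View {
    @StateObject private var model = PlayerViewModel(fileName: "video")

    var body: some View {
        PlayerContainerView(player: model.player, gravity: .aspectFill)
    }
}
```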

So, how can I include player controls?

Well, the solution can be simpler.

I expose a boolean in PlayerViewModel that ContentView can toggle. Since the view model holds the AVPlayer, nothing else needs to change: the views further down reflect whatever the player does.

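```swift
import AVFoundation
import Combine

class PlayerViewModel: ObservableObject {
    let player: AVPlayer

    init(fileName: String) {
        guard let url = Bundle.main.url(forResource: fileName, withExtension: "mp4") else {
            fatalError("Missing \(fileName).mp4 in the app bundle")
        }
        self.player = AVPlayer(playerItem: AVPlayerItem(url: url))
        play()
    }

    // Starts as true because init() already starts playback.
    // didSet does not run for the default value, only for later changes (e.g. a button toggle).
    @Published var isPlaying: Bool = true {
        didSet {
            if isPlaying {
                play()
            } else {
                pause()
            }
        }
    }

    func play() {
        let currentItem = player.currentItem
        // If the video already reached its end, rewind before playing again.
        if currentItem?.currentTime() == currentItem?.duration {
            currentItem?.seek(to: .zero, completionHandler: nil)
        }
        player.play()
    }

    func pause() {
        player.pause()
    }
}
```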
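The ContentView that goes with this version of PlayerViewModel isn’t shown above. Here is a minimal sketch of how the button could be wired, assuming whoever creates ContentView also creates and keeps the view model alive. The button only flips `isPlaying`; the view model’s `didSet` does the actual `play()` / `pause()` call.

```swift
import SwiftUI

struct ContentView: View {
    @ObservedObject var model: PlayerViewModel

    var body: some View {
        VStack {
            PlayerContainerView(player: self.model.player, gravity: .aspectFill)
            Button(action: {
                // Toggling the flag triggers play()/pause() in the view model.
                self.model.isPlaying.toggle()
            }, label: {
                Image(systemName: self.model.isPlaying ? "pause" : "play")
                    .padding()
            })
        }
    }
}
```

It would be created with something like `ContentView(model: PlayerViewModel(fileName: "video"))`. Since the container never changes, only the button icon and the player’s state update when you tap.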

I guess that’s good news: you don’t need an extra Combine pipeline in the loop. If you care about the actual state of your player, you’ll have to observe it using KVO. Maybe it’s for the best, to avoid stacking complexity.
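Why KVO matters here: `isPlaying` only records what the user asked for. When the video reaches its end, AVPlayer stops on its own, but `isPlaying` stays `true` and the button keeps showing “pause”. A sketch of mirroring the player’s real state with block-based KVO on `timeControlStatus` could look like this. `PlayerStatusObserver` and its `status` property are hypothetical names for illustration; the KVO callback may arrive on any thread, so the value is published on the main queue.

```swift
import AVFoundation
import Combine

// Hypothetical helper: publishes AVPlayer's actual playback state.
final class PlayerStatusObserver: ObservableObject {
    @Published private(set) var status: AVPlayer.TimeControlStatus

    private var observation: NSKeyValueObservation?

    init(player: AVPlayer) {
        status = player.timeControlStatus
        observation = player.observe(\.timeControlStatus, options: [.new]) { [weak self] player, _ in
            // Read the value from the player rather than from the change object.
            let newStatus = player.timeControlStatus
            // SwiftUI should only see @Published changes on the main thread.
            DispatchQueue.main.async {
                self?.status = newStatus
            }
        }
    }

    deinit {
        observation?.invalidate()
    }
}
```

A view could then show “play” whenever `status == .paused`, regardless of why the player paused.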

SwiftUI Player Enhancements

Stepping back for a second from this code, it seems we haven’t made much progress. Sure, it displays a simple player, but did we need SwiftUI for this?

Well, I’m coming to the point.

Now that we have a view that plays videos, we can take advantage of SwiftUI modifiers to enhance the player experience.

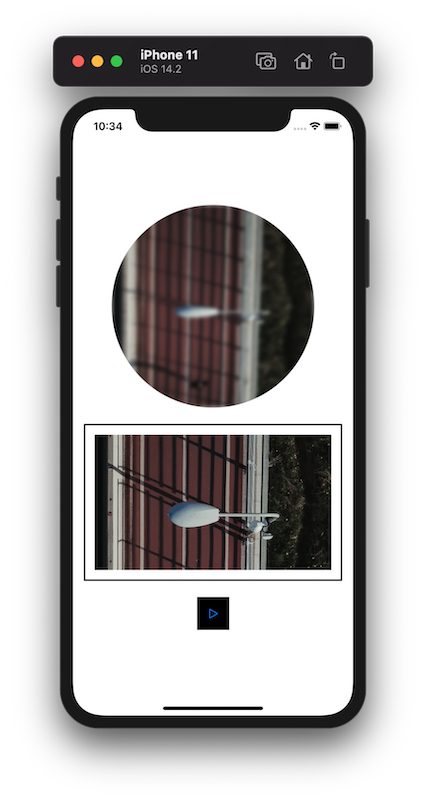

For instance, I can clip my video to a circle and add a blur effect really easily.

```swift
PlayerContainerView(player: model.player, gravity: .aspectFill)
    .frame(width: 300, height: 300)
    .blur(radius: 3.0)
    .clipShape(Circle())
```

I can also add a border with some padding to fake a TV monitor (why not?) and include an overlay for a better background effect.

```swift
PlayerContainerView(player: model.player, gravity: .aspectFill)
    .frame(height: 200)
    .overlay(Color.black.opacity(0.1))
    .padding()
    .border(Color.black, width: 2)
    .padding()
```

We could imagine an overlay or blur effect based on fast forward / backward actions; there are plenty more options to play with.
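As a small example of a state-driven effect, here the trigger is play / pause rather than fast forward / backward, since that’s the state our view model already has. This sketch blurs and dims the video while paused and animates the transition by toggling inside `withAnimation`. `PausableVideoView` is a hypothetical name; it assumes the article’s second PlayerViewModel (with `isPlaying`) and PlayerContainerView.

```swift
import SwiftUI

// Hypothetical view: the video is blurred and dimmed while paused.
struct PausableVideoView: View {
    @ObservedObject var model: PlayerViewModel

    var body: some View {
        VStack {
            PlayerContainerView(player: self.model.player, gravity: .aspectFill)
                .frame(height: 200)
                .blur(radius: self.model.isPlaying ? 0 : 6)
                .overlay(Color.black.opacity(self.model.isPlaying ? 0 : 0.3))
            Button(action: {
                // Changes made inside withAnimation animate the blur and overlay.
                withAnimation(.easeInOut(duration: 0.3)) {
                    self.model.isPlaying.toggle()
                }
            }, label: {
                Image(systemName: self.model.isPlaying ? "pause" : "play")
                    .padding()
            })
        }
    }
}
```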

This code is available on GitHub as videoSample. Video credits: cottonbro.

In conclusion, we saw how to create a custom view and expose it to SwiftUI code. It’s also important to understand each layer of logic to avoid unnecessary complexity, as in my first attempt to pair Combine and KVO before realizing it wasn’t necessary.

In my opinion, the most interesting part of this experiment is all the possibilities SwiftUI brings to AVPlayer for custom effects, making for a really great experience on Apple devices.