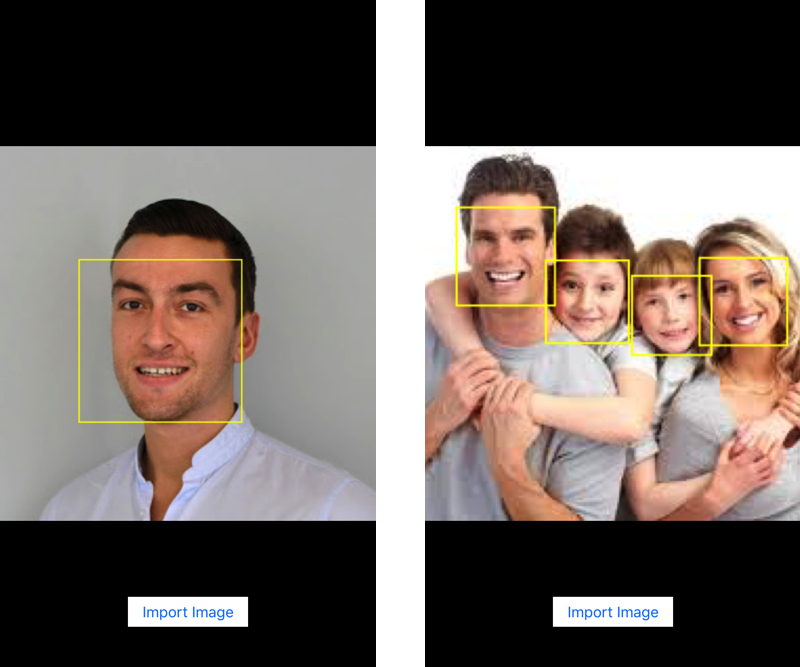

Face detection in iOS with Core ML and Vision in Swift

With iOS11, Apple introduced the ability to integrate machine learning into mobile apps with Core ML. As promising as it sounds, it also has some limitations, let’s discover it around a face detection sample app.

Couple years ago, Apple added Metal framework for graphic optimisation and calculation performance. For iOS11, the company built Core ML on top of it as a foundation framework for machine learning.

UPDATE - April 2020: This post has been updated for Swift 5.

When we talks about machine learning in iOS, it’s actually integrating trained models into mobile apps, there is no learning process here.

At the top of the stack, we have the framework Vision, based on CoreML for image analysis. That’s what I’m going to use to create a face detection iOS app, I actually don’t need to use a trained model for that.

Implementation

Assuming we already have an UIImage ready to use, we are going to start by creating a request to detect faces. Vision already includes two for that, VNDetectFaceRectanglesRequestfor faces, VNDetectFaceLandmarksRequestfor face features.

We also need a request handler which require a specific image format and orientation to be executed: CIImage and CGImagePropertyOrientation.

Finally our request has to be dispatched to another queue to avoid locking the UI during the detection.

At that time here is what my code looks like

lazy var faceDetectionRequest: VNDetectFaceRectanglesRequest = {

let faceLandmarksRequest = VNDetectFaceRectanglesRequest(completionHandler: { [weak self] request, error in

self?.handleDetection(request: request, error: error)

})

return faceLandmarksRequest

}()

func launchDetection(image: UIImage) {

let orientation = image.coreOrientation()

guard let coreImage = CIImage(image: image) else { return }

DispatchQueue.global().async {

let handler = VNImageRequestHandler(ciImage: coreImage, orientation: orientation)

do {

try handler.perform([self.faceDetectionRequest])

} catch {

print("Failed to perform detection .\n\(error.localizedDescription)")

}

}

}

It’s important to run detection on a separated thread. Executed on main thread, the app will get stuck.

Under my request completion handler, let’s log how many faces we detected. To do so, the result would be return as an array of VNFaceObservation.

guard let observations = request.results as? [VNFaceObservation] else {

fatalError("unexpected result type!")

}

print("Detected \(observations.count) faces")

Now we can detect faces, by drawing a square following the position given under VNFaceObservation. To achieve this, there are couple extra requirements. One is to actually scale and translate it based on the image height, something I discovered on Stack Overflow.

I created a sample iOS app for my testing following that process, available under VisionSample in Github.